Harnessing big data to understand and compare local urban environments, the backbone of healthy, active living, is the dream of many urban planning and public health researchers. At the 2018 Active Living Research conference in Banff, work from over 300 researchers and practitioners from over 30 disciplines frequently focused on using big data to evaluate small, even micro, urban contexts.

Over the last decade, urban transport researchers have come to recognize that macro-level elements of urban form, such as street connectivity, density and land-use mix, differ from the microscale elements of streetscapes that shape much of the human experience of the street. Microscale features include details such as graffiti, road crossing features, sidewalk buffers, plantings, street furniture, curbs and curb cuts. People who walk, bicycle or use mobility aids such as scooters are much more exposed to these details than people in automobiles. Yet detailed data and software tools for understanding and comparing microscale elements are lacking. Even today, most microscale data used in research are collected through in-person environmental audits, much as cartographers collected data a hundred years ago. As a result, evidence-based comparison and evaluation of microscale streetscapes remain a struggle.

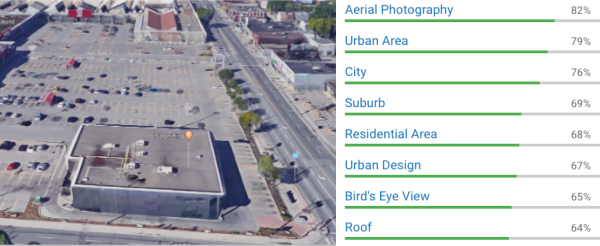

The autonomous vehicle industry is making great strides in image analysis, using machine learning to train artificial intelligence systems to recognize street activity and objects, but these systems tend to be proprietary and are generally focused on the view from a vehicle. Thanks to the massive data collection behind Google Street View, anyone can now view the microscale environment from their desk (albeit from the viewpoint of a car), but the platform lacks analysis tools, and coding images so that they can be compared requires significant human input. Radical change is underway, however, enabled by crowdsourcing through human intelligence marketplaces such as Amazon’s Mechanical Turk and by full-stack machine learning platforms such as Hive.ai. (For a discussion of the ethical implications of the Mechanical Turk system in other research fields, see Fort, K., Adda, G., & Cohen, K. B. (2011). Amazon Mechanical Turk: Gold mine or coal mine? Computational Linguistics, 37(2), 413–420.)

These tools enable databases of images to be coded at low cost. The human-coded images can then be used to train artificial intelligence systems to recognize features across millions of images. Tools such as Google’s Cloud Vision API have great potential for urban researchers but have not yet been “trained” to see what we need them to see. In one example of new work, researchers at Arizona State University working on WalkIT Arizona compared microscale neighbourhood audits by Amazon Mechanical Turk workers with audits by expert raters and found a high level of consistency between the two (Adams et al., 2018; Phillips et al., 2018). The ability to train AI image recognition systems will lead to new evidence-based understandings and comparisons of urban neighbourhoods, and may eventually allow us to analyze neighbourhood quality and equity more effectively.
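One common way to put a number on the kind of rater agreement described above is Cohen's kappa, which corrects raw percent agreement for the agreement two raters would reach by chance. The sketch below assumes a single present/absent audit item (for example, a marked crosswalk) coded by one crowd worker and one expert for the same set of street segments. The ratings are invented for illustration, and we are not suggesting this is the statistic the Arizona State University team reported.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters who coded the same items.

    kappa = (p_o - p_e) / (1 - p_e), where p_o is the observed agreement
    and p_e is the agreement expected by chance from each rater's
    category frequencies.
    """
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("raters must code the same non-empty set of items")
    n = len(rater_a)
    # Observed proportion of items on which the two raters agree.
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: product of each rater's marginal proportions,
    # summed over every category either rater used.
    counts_a = Counter(rater_a)
    counts_b = Counter(rater_b)
    categories = set(counts_a) | set(counts_b)
    p_e = sum((counts_a[c] / n) * (counts_b[c] / n) for c in categories)
    if p_e == 1:
        # Both raters used one identical category for every item; kappa is
        # undefined here, so treat it as perfect agreement.
        return 1.0
    return (p_o - p_e) / (1 - p_e)


# Hypothetical ratings for 10 street segments: 1 = crosswalk present, 0 = absent.
expert = [1, 1, 0, 1, 0, 0, 1, 1, 0, 1]
crowd = [1, 1, 0, 1, 0, 1, 1, 1, 0, 1]
kappa = cohens_kappa(expert, crowd)
print(round(kappa, 2))  # → 0.78
```

Here the raters agree on 9 of 10 segments (90%), but because both mark most segments as "present", chance alone would produce 54% agreement, so kappa is noticeably lower than the raw figure.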

While we wait for more of this fine-grained data to become readily available, much can be done by creatively compiling and analyzing the large-scale data sources we already have. At the same Active Living Research conference, TCAT’s Trudy Ledsham presented Scarborough Cycles, a project that used neighbourhood-level data to identify areas with strong potential for successful cycling interventions. Data from Statistics Canada and the Transportation Tomorrow Survey allowed us to pinpoint neighbourhoods where many trips were shorter than 5 km, car ownership was low and local destinations were plentiful, among a host of other factors. We then identified community partners to implement cycling uptake strategies based on what we had learned about the local context. Although the selected neighbourhood had had a bike lane removed in the recent past after pushback from drivers, the program has been wildly successful, reaching over 2,000 participants in its first two years. The results demonstrate the power of a targeted, data-based approach, rather than a broad, one-size-fits-all campaign, to encourage cycling uptake.
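The neighbourhood screening step can be pictured as a simple filter over neighbourhood-level indicators. The Python sketch below is illustrative only: the field names, threshold values, ranking rule and example areas are all hypothetical, and the actual Scarborough Cycles analysis weighed more factors than these three. Only the 5 km trip-length cut-off comes from the description above.

```python
from dataclasses import dataclass


@dataclass
class Neighbourhood:
    # Hypothetical indicators; a real analysis would derive these from
    # census and travel-survey tables.
    name: str
    short_trip_share: float        # share of trips shorter than 5 km (0 to 1)
    vehicles_per_household: float  # average household car ownership
    local_destinations: int        # count of nearby shops, schools, services


def screen(neighbourhoods, min_short_share=0.5, max_vehicles=1.0, min_destinations=20):
    """Keep neighbourhoods that pass every (hypothetical) threshold,
    ordered so the highest share of short trips comes first."""
    candidates = [
        n for n in neighbourhoods
        if n.short_trip_share >= min_short_share
        and n.vehicles_per_household <= max_vehicles
        and n.local_destinations >= min_destinations
    ]
    return sorted(candidates, key=lambda n: n.short_trip_share, reverse=True)


# Made-up example areas, not real Scarborough data.
areas = [
    Neighbourhood("Area A", 0.62, 0.8, 35),
    Neighbourhood("Area B", 0.41, 0.7, 50),  # too few short trips
    Neighbourhood("Area C", 0.70, 1.4, 40),  # car ownership too high
    Neighbourhood("Area D", 0.55, 0.9, 25),
]
print([n.name for n in screen(areas)])  # → ['Area A', 'Area D']
```

A hard threshold filter is the simplest possible screen; a weighted score across indicators would instead let strong performance on one factor offset weakness on another.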

References: Active Living Research Conference 2018

Crowdsourcing microscale audits of pedestrian streetscapes: Amazon MTurkers versus expert raters

M.A. Adams, C.B. Phillips, J.C. Hurley, H. Hook, W. Zhu, E. Western, T.Y. Yu

Arizona State University, USA

Combining crowdsourcing and Google Street View to assess microscale features supportive of physical activity: Reliability of online crowdsourced ratings

C.B. Phillips, M.A. Adams, J.C. Hurley, H. Hook, W. Zhu, E. Western, T.Y. Yu

Arizona State University, USA

Scarborough cycles: Building bike culture beyond downtown

T. Ledsham, B. Savan, N. Smith Lea

University of Toronto, Canada, & The Centre for Active Transportation, Canada

Other research presented at the Active Living Research Conference 2018 on big data and physical activity:

Crowdsourcing walking and cycling route data for injury research: the interactive pedestrian injury mapper

S.J. Mooney, A.G. Rundle

University of Washington, Seattle, USA, & Columbia University, USA

The future of active living big data: validity and reliability of wearable activity trackers

H.A. Yates, D.R. Winslow, J. Orlosky, O. Ezenwoye, G.M. Besenyi

Augusta University, USA, & Kansas State University, USA

Measuring walkability of neighbourhoods for population health research in Canada

N.A. Ross, R. Wasfi, T. Herrmann

McGill University, Canada, & Centre de recherche du Centre hospitalier de l’Université de Montréal, Canada

A novel approach to investigate the impact of the built environment on physical activity among young adults

B. Assemi, B. Zapata D., C. Boulange

University of Queensland, Australia

Other projects underway in Canada:

The SMART Study – Saskatchewan, Let’s Move And Map Our Activity! https://www.smartstudysask.com/about

Team Interact – The INTErventions, Research, and Action in Cities Team http://www.teaminteract.ca/

Other research of interest

Gebru, T., Krause, J., Wang, Y., Chen, D., Deng, J., Aiden, E. L., & Fei-Fei, L. (2017). Using deep learning and Google Street View to estimate the demographic makeup of the US. arXiv preprint arXiv:1702.06683.